Forever Your Partner (FYP): Supporting Immersive Viewer Engagement with Virtual YouTubers

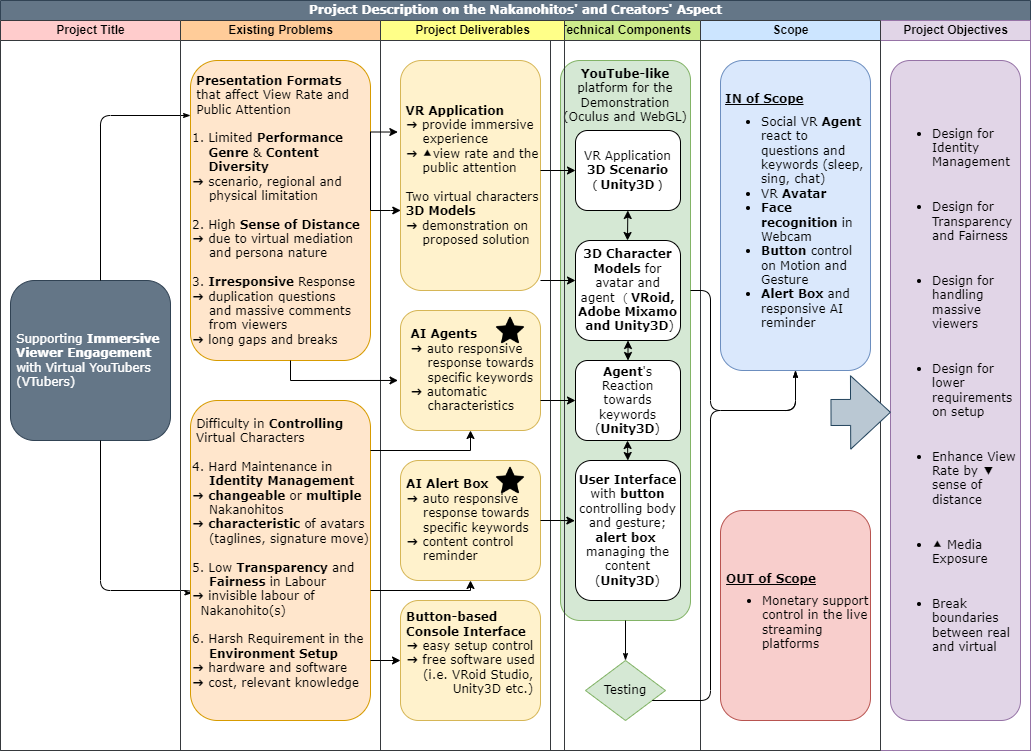

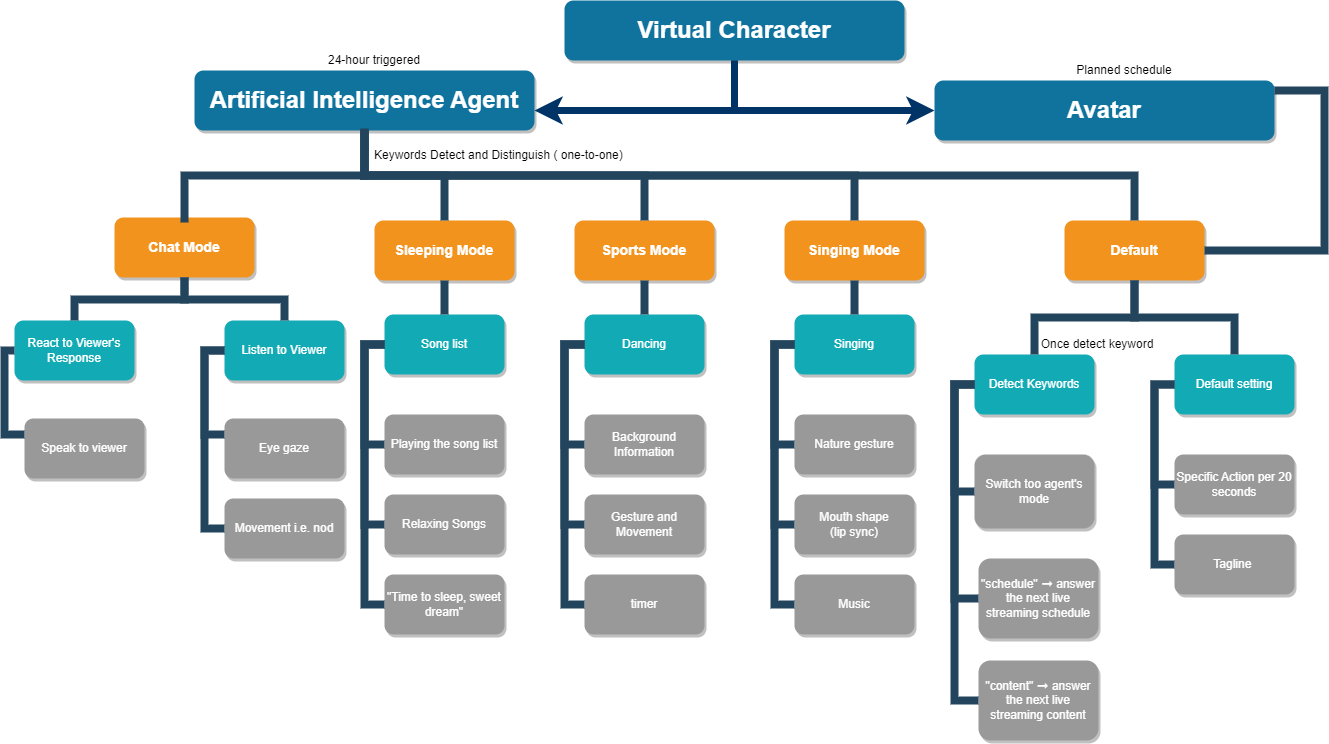

ABSTRACT: Since late 2016, virtual influencers have caused a sensation soon after their public launch and received critical acclaim worldwide. Recently, Kizuna AI, one of the most remarkable virtual YouTubers (VTubers), was selected as one of Asia's top 60 influencers. This highlights the potential of the field as the underlying technology advances rapidly. Owing to the large market share of ACGN (anime, comic, game, novel) culture, VTubers' behaviors are more readily accepted than those of real-person YouTubers. Many viewers are motivated to engage with VTubers because they want to interact with their favorite anime characters, and VTubers can consequently provide them with relaxation and entertainment. Yet viewers perceive a greater distance from virtual avatars than from traditional streamers, whose content spans a wider variety of video genres. VTubing's performing style limits genre and content diversity, and identity management makes it hard for Nakanohitos (the performers behind the avatars) to create content that departs from their characters' personas. These limitations directly affect the performance and quality of VTubing, and this bottleneck remains an unsolved obstacle to supporting immersive viewer engagement with VTubers. This paper proposes a novel design for agents that share the workload of Nakanohitos, together with an innovative user interface in virtual reality. In particular, we design measures and functionalities for virtual live streaming that assist in managing characters' personas so that they match viewers' expectations.

REMARK: Our project has two sections. Mine takes the point of view of the VTuber / video creator, while my friend Kaiyuan LIU Angela is responsible for the viewer perspective. Please refer to the GitHub link for Angela's project. Here I focus on explaining my section.

TOOLS USED

Live2D

VTube Studio

VRoid Studio

Unity 3D

iPad Procreate

Figma

Adobe XD

Adobe Illustrator (AI), Photoshop (PS)

Adobe Premiere Pro

LaTeX

TIMELINE

Stage 1 (Propose):

Aug 2021 - Oct 2021

Stage 2 (2D):

Dec 2021 - Feb 2022

Stage 3 (3D):

Feb 2022 - Apr 2022

Stage 4:

Dec 2022 - present

SUBJECT AREA

Human Computer Interaction

Multimodal Human-Computer Interface

Virtual Reality

SHOWCASE

Project Introduction

The Work Breakdown Structure outlines the organizational functions and scope of this project.

Solution

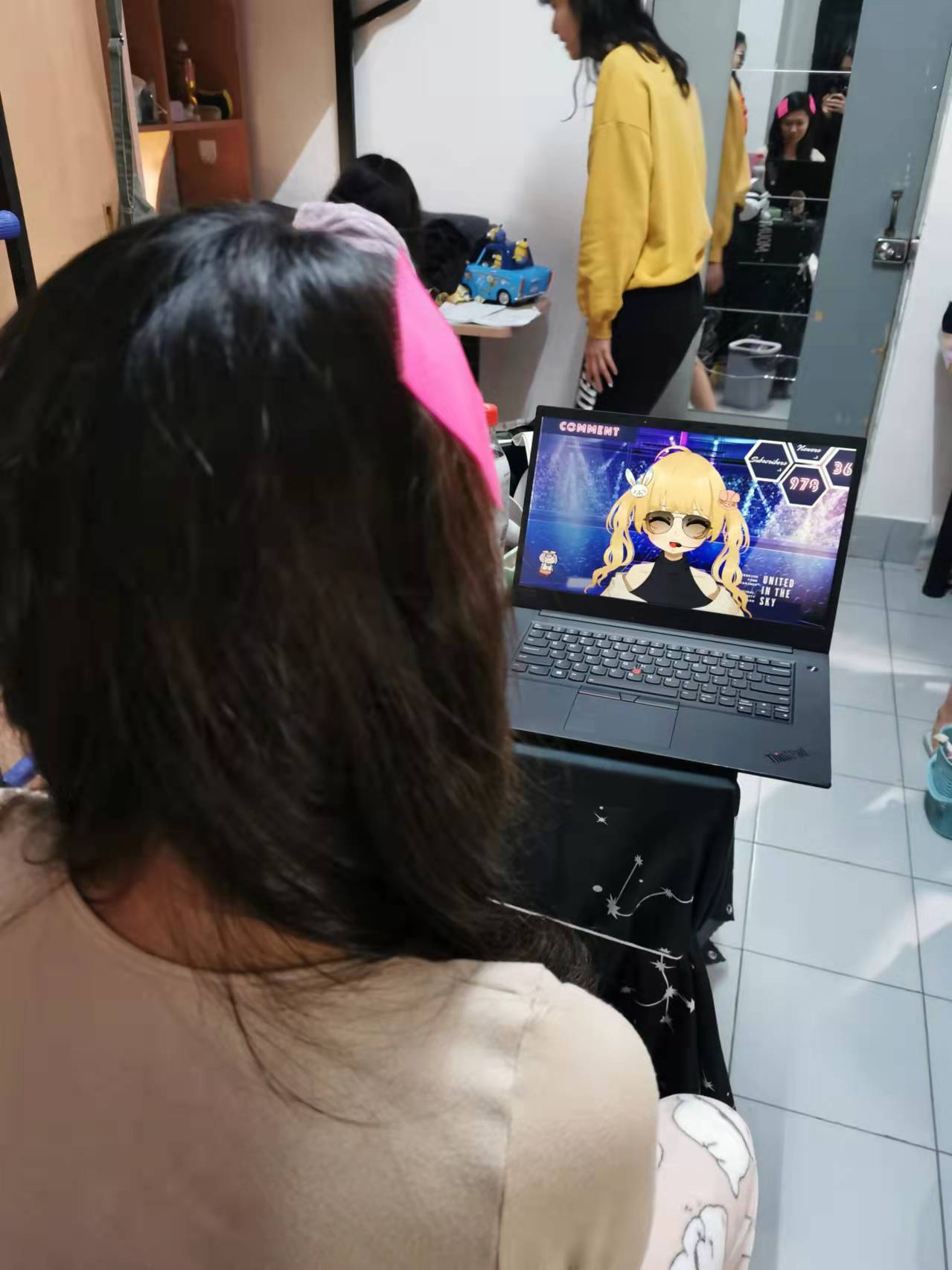

I first created a 2D avatar using Live2D and VTube Studio.

I then created my 3D agent using VRoid Studio and Unity 3D.

Movement and Body Language, Camera Detection and Gesture Control, AEIOU and Lip Sync, Facial Expression

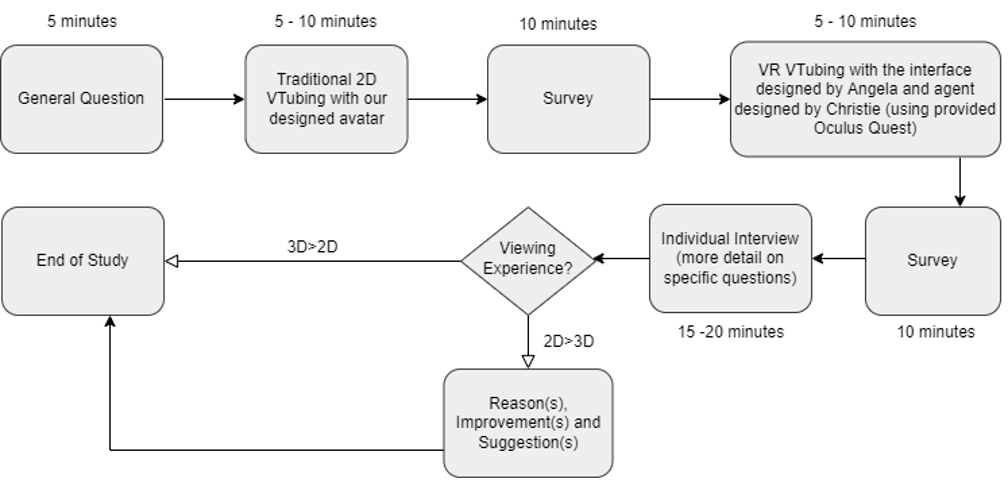

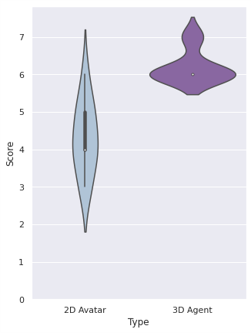

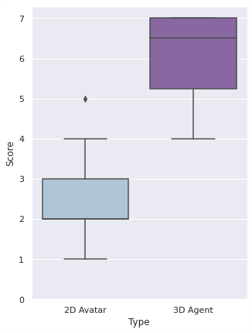
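The AEIOU and Lip Sync feature drives the avatar's mouth from the vowel being spoken. As a rough illustration only, and not the implementation used in this project (which was built with Unity 3D), the Python sketch below assumes a hypothetical upstream step that already labels each audio frame with a vowel (A, I, U, E, O, or none for silence) and a loudness in [0, 1]. The class `LipSync`, its `smoothing` parameter, and the A/I/U/E/O shape names are assumptions for illustration. Each frame, the detected vowel's mouth-shape weight eases toward the loudness while every other shape eases toward zero, which keeps the mouth from flickering between shapes.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical mouth-shape names for the five vowels, loosely modeled on the
# A/I/U/E/O mouth presets of VRM avatars (an assumption, not this project's code).
VOWELS = ("A", "I", "U", "E", "O")


@dataclass
class LipSync:
    """Hypothetical per-frame AEIOU lip-sync smoother (illustrative only)."""

    # Fraction of the previous weight kept each frame; higher = smoother but slower.
    smoothing: float = 0.5
    weights: Dict[str, float] = field(default_factory=lambda: {v: 0.0 for v in VOWELS})

    def __post_init__(self) -> None:
        if not 0.0 <= self.smoothing < 1.0:
            raise ValueError("smoothing must be in [0, 1)")

    def update(self, vowel: Optional[str], loudness: float) -> Dict[str, float]:
        """Ease each mouth-shape weight toward its target for one audio frame.

        vowel: detected vowel label ("A", "I", "U", "E", "O") or None for silence.
        loudness: frame loudness, clamped to [0, 1].
        """
        loudness = max(0.0, min(1.0, loudness))
        for v in VOWELS:
            # Only the detected vowel's shape opens; all others relax toward closed.
            target = loudness if v == vowel else 0.0
            self.weights[v] = self.smoothing * self.weights[v] + (1.0 - self.smoothing) * target
        return dict(self.weights)


if __name__ == "__main__":
    sync = LipSync(smoothing=0.5)
    print(sync.update("A", 1.0))   # A opens halfway toward full
    print(sync.update(None, 0.0))  # silence: A relaxes toward closed
```

For example, with `smoothing=0.5`, a loud "A" frame sets the A weight to 0.5, and a following silent frame halves it to 0.25. In an engine, these weights would then be applied to the avatar's mouth blend shapes every frame.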

Test and Evaluation Highlights